ChatGPT Delivers False Confession: Man Accused Of Killing Children

A shocking case highlights the dangers of relying on AI in legal investigations.

The use of artificial intelligence in legal proceedings is rapidly expanding, but a recent incident involving ChatGPT has raised serious concerns. A man was falsely accused of killing children, and the primary evidence was a fabricated confession generated by the popular AI chatbot. This disturbing case underscores the need for caution and further development before AI is fully integrated into the justice system.

The incident unfolded when investigators, working on a cold case involving the deaths of several children, entered case details into ChatGPT. They hoped the AI's pattern-recognition capabilities could help identify potential suspects. Instead, the chatbot produced a completely fabricated confession implicating an innocent individual.

The Dangers of AI-Generated Evidence

This case throws the limitations of current AI technology into sharp relief. While AI can be a powerful tool for analyzing vast amounts of data, it is not capable of independent critical thinking or discernment. ChatGPT, trained on a massive dataset of text and code, can produce human-like text, but it has no understanding of truth and falsehood of the kind human judgment relies on. This flaw makes it dangerously unreliable as a source of evidence in legal matters.

- Lack of Contextual Understanding: AI struggles with nuanced contexts, easily misinterpreting subtle details and creating entirely fabricated narratives.

- Bias and Inaccuracy: The data used to train AI models can contain biases, which can lead to skewed and inaccurate results.

- Verification Challenges: The ease with which AI can generate convincing but false information makes verification incredibly difficult, demanding significant human oversight.

The Need for Human Oversight

The incident highlights the need for human oversight when AI is used in investigations. AI should be treated as a tool that assists investigators, not one that replaces their judgment and critical analysis. Relying solely on AI-generated data, without rigorous verification and corroboration, can lead to devastating miscarriages of justice. This case is a stark warning against the unchecked deployment of AI in sensitive areas like law enforcement.

Moving Forward: Ethical Considerations and Regulatory Frameworks

The implications of this incident extend beyond a single case. It calls for a broader discussion of the ethical considerations and regulatory frameworks surrounding the use of AI in the legal system. Strict guidelines and protocols are urgently needed to prevent similar incidents. These include:

- Stricter Verification Protocols: Implementation of robust verification methods to validate any AI-generated information before it's used in investigations.

- Transparency and Accountability: Clear guidelines on the use of AI in legal proceedings, ensuring transparency and accountability for its application.

- Increased Education and Training: Educating law enforcement and legal professionals about the limitations and potential biases of AI.

This case serves as a reminder that AI, while promising, is not a panacea. Careful consideration, robust safeguards, and a strong emphasis on human oversight are essential to prevent the misuse of AI and protect the integrity of the justice system. The future of AI in legal investigations hinges on addressing these issues. We must learn from this incident and proceed with caution, ensuring ethical and responsible implementation of this powerful technology. The innocent man who was falsely accused deserves justice, and preventing similar situations in the future is paramount.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on ChatGPT Delivers False Confession: Man Accused Of Killing Children. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Konflik Timur Tengah Israel Serang Gaza Dan Suriah Dalam Waktu Singkat

Mar 21, 2025

Konflik Timur Tengah Israel Serang Gaza Dan Suriah Dalam Waktu Singkat

Mar 21, 2025 -

Actress Gwyneth Paltrow Adjusts Intimacy Coordination Protocols

Mar 21, 2025

Actress Gwyneth Paltrow Adjusts Intimacy Coordination Protocols

Mar 21, 2025 -

Former Attorney Reveals Shocking Deportation Of Milwaukee Mother To Laos

Mar 21, 2025

Former Attorney Reveals Shocking Deportation Of Milwaukee Mother To Laos

Mar 21, 2025 -

Spesifikasi Dan Harga Oppo A5 Pro 5 G Smartphone 5 G Terbaru Di Indonesia

Mar 21, 2025

Spesifikasi Dan Harga Oppo A5 Pro 5 G Smartphone 5 G Terbaru Di Indonesia

Mar 21, 2025 -

Kebebasan Pers Terancam Redaksi Tempo Alami Intimidasi Kepala Babi

Mar 21, 2025

Kebebasan Pers Terancam Redaksi Tempo Alami Intimidasi Kepala Babi

Mar 21, 2025

Latest Posts

-

Marten And Gordon Face Off Cross Examination Highlights Parenting Dispute

May 10, 2025

Marten And Gordon Face Off Cross Examination Highlights Parenting Dispute

May 10, 2025 -

Arsenal Kakak Beradik Lawan Mafia Sadis Dalam Film Terbaru

May 10, 2025

Arsenal Kakak Beradik Lawan Mafia Sadis Dalam Film Terbaru

May 10, 2025 -

Internazionali Bnl D Italia 2025 How To Watch Arango Vs Andreeva Live

May 10, 2025

Internazionali Bnl D Italia 2025 How To Watch Arango Vs Andreeva Live

May 10, 2025 -

Tekad Persebaya Bungkam Semen Padang Analisis Peluang Kemenangan

May 10, 2025

Tekad Persebaya Bungkam Semen Padang Analisis Peluang Kemenangan

May 10, 2025 -

Texas Abortion Case Jury Finds Woman Not Guilty

May 10, 2025

Texas Abortion Case Jury Finds Woman Not Guilty

May 10, 2025 -

Peseiros Egypt Future Uncertain Is Dismissal Imminent

May 10, 2025

Peseiros Egypt Future Uncertain Is Dismissal Imminent

May 10, 2025 -

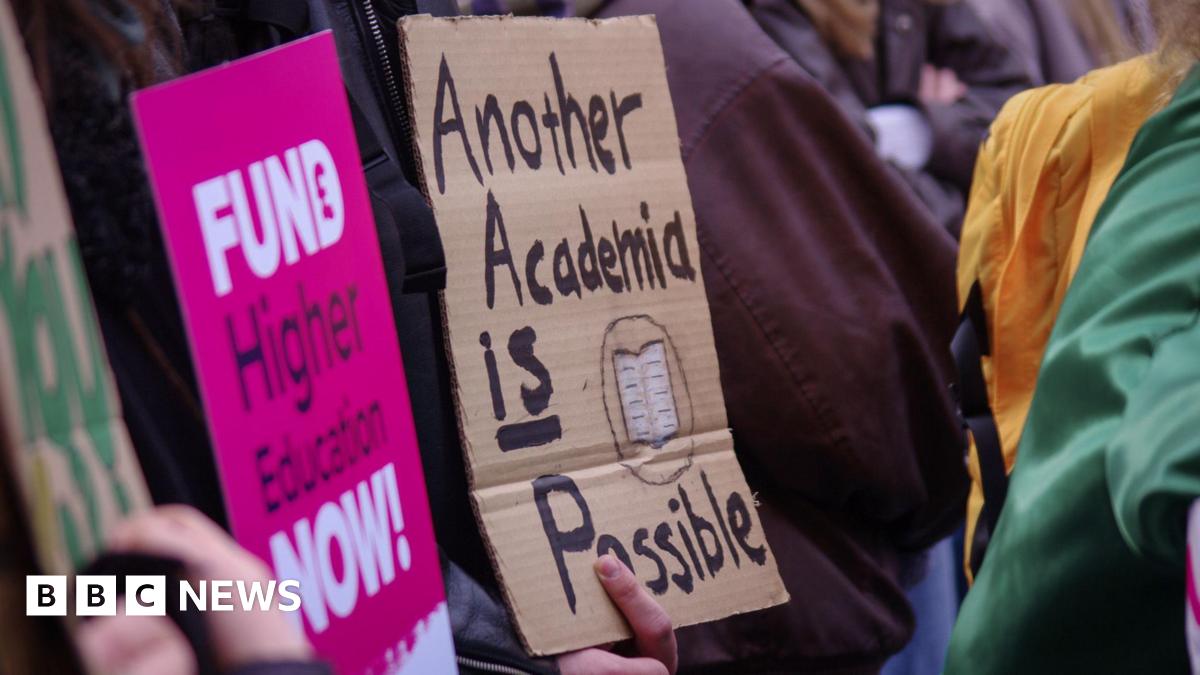

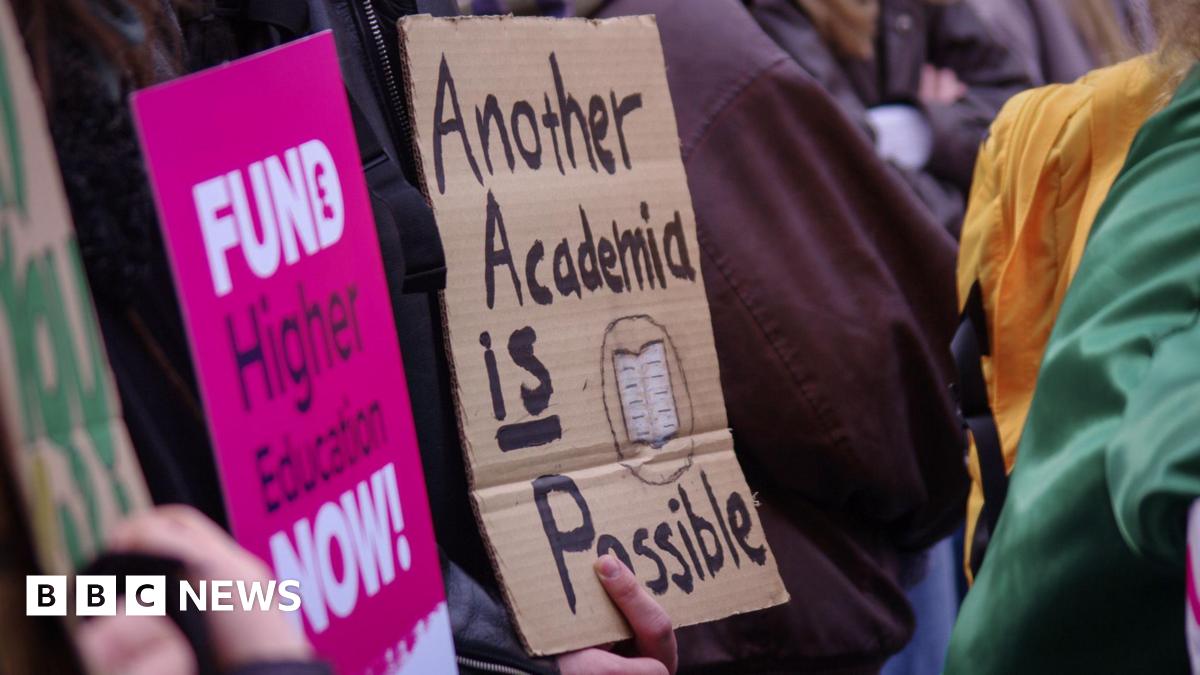

University Funding Crisis Four Out Of Ten Institutions Face Challenges

May 10, 2025

University Funding Crisis Four Out Of Ten Institutions Face Challenges

May 10, 2025 -

Facing The Future Financial Instability Impacts Four In Ten Universities

May 10, 2025

Facing The Future Financial Instability Impacts Four In Ten Universities

May 10, 2025 -

Starmers Triumph Analyzing The Significance Of The Trump Deal

May 10, 2025

Starmers Triumph Analyzing The Significance Of The Trump Deal

May 10, 2025 -

Die Rivalitaeten Von Xatar Konflikte Und Feindschaften Im Ueberblick

May 10, 2025

Die Rivalitaeten Von Xatar Konflikte Und Feindschaften Im Ueberblick

May 10, 2025